Transparency in a real-time global illumination model for atmospheric scattering in a deferred rendering pipeline for camera-based ADAS tests with HIL simulators

Master's thesis by Marius Dransfeld

Supervisor: Sabrina Heppner, M.Sc.

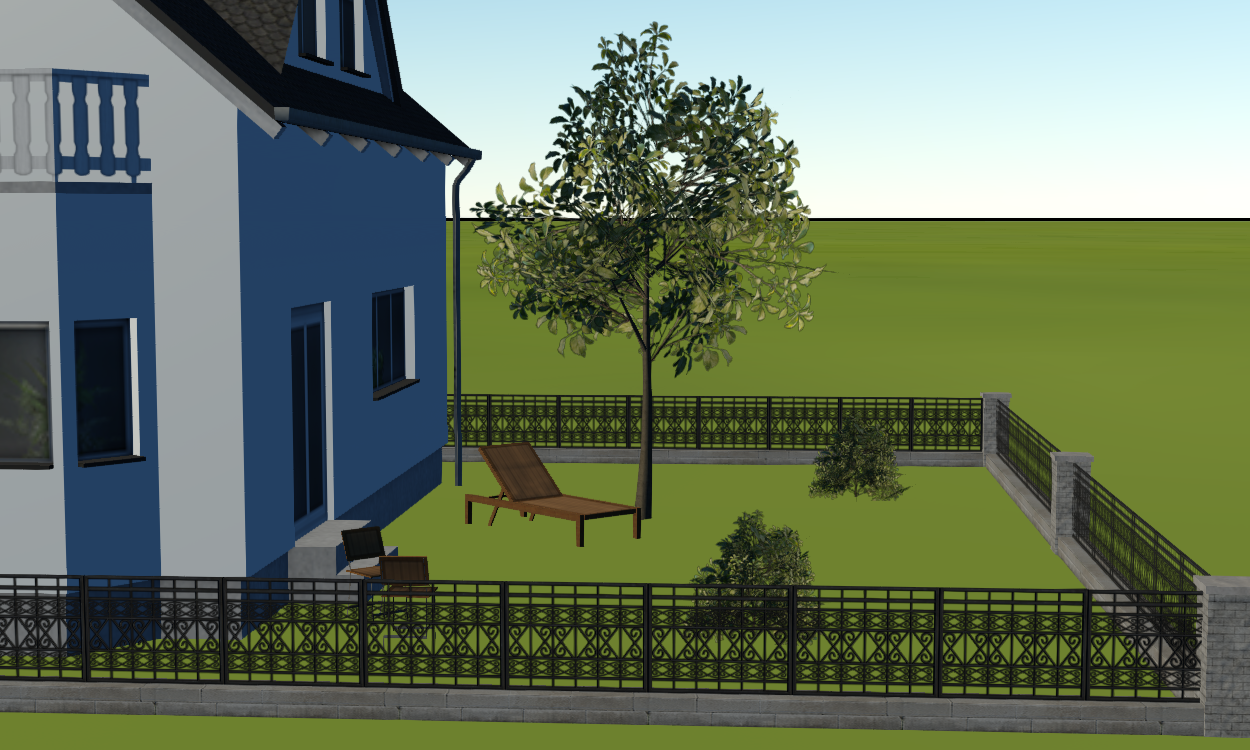

Camera-based Advanced Driver Assistance Systems (ADAS) have gained increasing importance in recent years. For example, they are evaluated in Euro NCAP tests, a European car safety performance assessment program, and are required to achieve the highest rating of five stars. Instead of performing test drives with real cars, camera-based ADAS are tested with hardware-in-the-loop (HIL) simulators. These provide a three-dimensional visualization of the simulation environment, which is fed to the camera under test and its connected IPU (Image Processing Unit). To ensure that the virtual tests produce results as close as possible to those of real test drives, the rendering must be both realistic and real-time. A global illumination model for atmospheric scattering is one way to create realistic direct and indirect illumination. To achieve real-time frame rates, a deferred rendering pipeline can be used. Another important aspect of realism is the correct rendering of transparent objects inside the virtual test scenes, e.g. the windows of cars and buildings. This thesis builds upon an existing implementation of a deferred rendering pipeline that integrates atmospheric scattering for sunlight. The initial implementation is unable to render transparent objects correctly, as standard deferred rendering is inherently incompatible with transparency (see Figures 1 and 2). The goal of this thesis is therefore to improve the visual quality of the renderings by extending deferred rendering with support for transparent objects.
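The incompatibility between standard deferred rendering and transparency can be illustrated with a minimal sketch for a single pixel. It assumes a simplified G-buffer that keeps only the surface nearest to the camera, and compares this with back-to-front alpha blending as a forward renderer might do it. The function names `gbuffer_resolve` and `blend_over`, the fragment layout, and all values are hypothetical and not taken from the thesis implementation.

```python
# Illustrative sketch only: all names and values here are hypothetical
# and not taken from the thesis implementation.

def gbuffer_resolve(fragments):
    """Deferred-style resolve: the G-buffer keeps one surface per pixel,
    so only the fragment nearest to the camera survives the depth test."""
    nearest = min(fragments, key=lambda f: f["depth"])
    return nearest["color"]


def blend_over(fragments):
    """Forward-style reference: sort back to front and apply the
    'over' operator, result = alpha * src + (1 - alpha) * dst."""
    result = (0.0, 0.0, 0.0)  # black background
    for f in sorted(fragments, key=lambda f: f["depth"], reverse=True):
        a = f["alpha"]
        result = tuple(a * s + (1.0 - a) * d for s, d in zip(f["color"], result))
    return result


# One pixel covered by an opaque red wall behind a half-transparent blue window.
pixel_fragments = [
    {"depth": 10.0, "color": (1.0, 0.0, 0.0), "alpha": 1.0},  # wall
    {"depth": 2.0, "color": (0.0, 0.0, 1.0), "alpha": 0.5},   # window
]

deferred_color = gbuffer_resolve(pixel_fragments)  # wall is lost behind the window
blended_color = blend_over(pixel_fragments)        # wall shows through the window
```

In this simplified model the deferred result contains only the window color, because the wall behind it is never stored, whereas the blended result mixes both surfaces. Techniques that add transparency to a deferred pipeline have to recover this lost information in some way.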

As a first step, the use of transparency in computer graphics and the problems it poses are examined in general. Then several techniques that enable a deferred rendering pipeline to include transparency are reviewed. The three most promising methods are chosen and implemented in standalone prototypes. In a last step, these are evaluated and compared with regard to performance and image quality to determine the technique most suitable for future ADAS tests. Figures 3 and 4 show rendering results of the same scenes and illustrate the improvements over the previous approach.

This master's thesis was accepted into the 5th Annual Faculty Submitted Student Work Exhibit at SIGGRAPH 2016. The submitted video can be seen here.